With the rise of mental health awareness, the number of people embarking on a journey of self-discovery and healing has risen as well. We are witnessing a wonderful shift in the way the world views mental health issues. Although there will always be people who perpetuate stigma through their skewed beliefs, as a society we have reached a common understanding of how important good mental health is.

However, as the number of people seeking help increases, there is a noticeable lack of resources providing support and solutions. What happens when demand is greater than supply? Patients vastly outnumber doctors, and both costs and waiting times skyrocket. The solution? Surprisingly enough, emotional support chatbots appear to be perfect for the job.

Image created by Possessed Photography

What is an Emotional Support Chatbot?

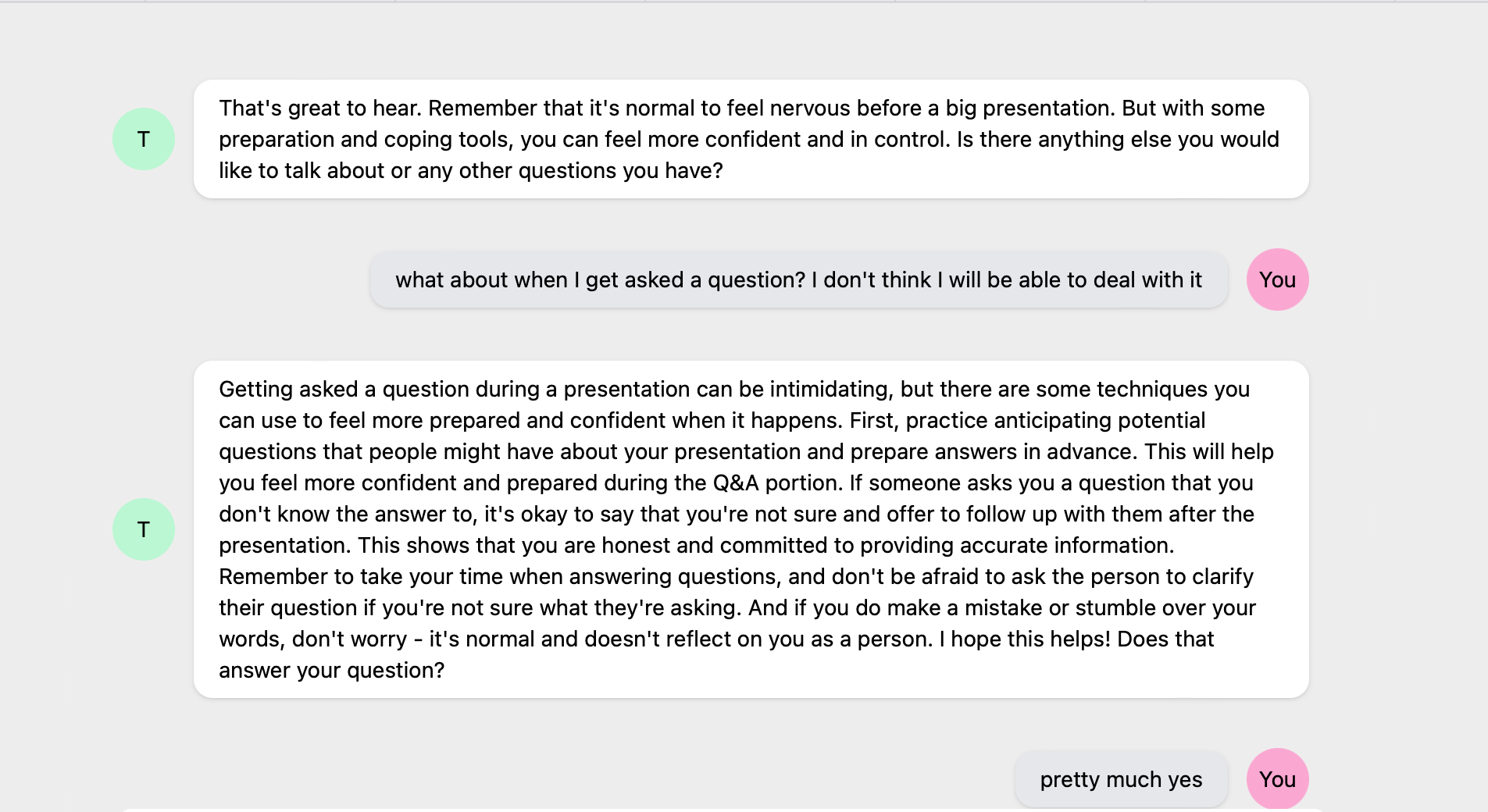

Chatbots are a cutting-edge technology that many believe is about to change the world. In essence, a chatbot is a simpler form of AI: the Oxford Dictionary defines it as a computer program designed to simulate conversation with human users, especially over the Internet.

Chatbots started out as search engines and tools for streamlining personal services, and now they are entering the health care system at an astonishing rate. AI developers claim that chatbots are an innovative way to tackle mental health issues and support functional wellbeing, and many health specialists support this initiative.

The world has never been in more dire need of mental health professionals. And while chatbots aren't meant to replace human therapists (not yet, at least), they can act as neutral listeners that help you talk through your problems and refer you to real doctors if needed.

Advantages of Chatbots

One of the perks of chatbots is that you don't have to talk to a person. Many people experience severe anxiety and worry during appointments with a specialist, especially when discussing intimate topics such as their mental health. A chatbot can ease these feelings of uncertainty, because you're talking to a computer program rather than a real person who is capable of expressing stigma, judgement, and disapproval. After all, one of the biggest fears we all share is having our feelings and experiences invalidated.

Furthermore, emotional support chatbots aren't just ordinary AI-controlled programs that function as search engines. Supportive companion chatbots are often developed with the help of psychologists trained in cognitive behavioral therapy. They analyze word choice and phrasing to deliver not only comforting messages but also advice on grief, stress, chronic pain, and more. Essentially, these chatbots can potentially tackle issues at an early stage. However, they aren't able to respond to emergencies; when they receive alarming messages, they immediately refer the user to a human specialist.

In an interview with Bloomberg, Mustafa Suleyman, one of the creators of the better-known chatbot companion Pi (Personal Intelligence), shared that "Many people feel like they just want to be heard and they just want a tool that reflects back what they said to demonstrate they have actually been heard."

One of the biggest issues these chatbots can help solve is affordability and access. For the time being, many chatbots are not only free to use but also easily accessible from the palm of your hand.

However, how wise is it to integrate technology into yet another aspect of our lives?

Robert Wagner, CC BY 4.0, via Wikimedia Commons

The Downsides

How reliable can such technology be when dealing with people in mental distress?

AI is still in the early stages of development. It should be considered negligence on the part of health professionals to refer patients to chatbots for help with their mental anguish.

For one, allowing chatbots to function as pseudo-therapists and psychoanalyze people with serious mental health issues sounds like the textbook definition of a risk. Not only are algorithms not yet developed enough to emulate complex human emotions, but they can also become a liability. Computers are unable to provide genuinely empathic care, and they can seriously misunderstand people and generate damaging messages as a result.

Chatbots run the risk of furthering the stigma against therapy and mental health support. AI-driven therapy lacks not only human interaction but also human sympathy, empathy, and understanding. Chatbots may be programmed to mimic human writing, but longer and deeper conversations are bound to feel empty and sterile. A patient whose first exposure to mental health support is a chatbot, and who has a dissatisfying experience, would be well within their rights to refuse proper therapy with a human being.

And all of this barely scratches the surface of where problems may occur. Disclosing private and sensitive information such as your mental health state to an online program can lead to data leaks and result in serious privacy issues.

Such challenges should not be taken lightly when considering using emotional support chatbots.

The Dawn of AI

There is a certain irony in emotional support chatbots. The fact that such technology already exists, and that AI is becoming more and more sophisticated, is anxiety-inducing on its own. How far has humanity come that we can program empathy into software and allow it to handle sensitive issues, yet we still call apathetic people robots?

And where exactly do we draw the line?

There are ongoing discussions about using chatbot-operated apps to notify doctors when patients skip their medication, or to monitor people's body language, behavior, and emotional state during sessions and keep this data for future reference and diagnosis.

While this may seem appealing to some, others will consider it a violation.

The truth is, AI-operated chatbots are still a new technology, and research on them remains limited, especially on the effects they can have on people who use them as support companions. A lot can go wrong, and privacy breaches may become the least of our worries. Perhaps in a couple of decades humanity will witness the dawn of AI in its full potential, when using chatbots for mental health support will be completely safe.

There are many ways in which we can use AI to better our societies, but until we are able to cross this bridge safely, we should ask ourselves: who bears the responsibility if the technology goes wrong?